Artificial Intelligence (AI) is revolutionizing industries by providing intelligent solutions to complex problems. OpenAI has been a significant player in this space, but their APIs can be costly, especially for startups and small businesses. Fortunately, there are alternatives like Ollama AI that offer powerful language models without the hefty price tag. This article explores how to set up and run Ollama AI on a Windows local machine.

Understanding Ollama AI

Ollama AI is an open-source tool for downloading and running large language models on your own machine. The models it runs can be used for natural language processing (NLP) tasks such as text generation, summarization, and chat, without the per-request costs of hosted APIs.

Why Choose Ollama AI?

- Cost-Effective: Ollama AI is free to use, and the models run on your own hardware, so there are no per-request API fees. This makes it an attractive option for businesses with tight budgets.

- Versatility: The platform supports a wide range of applications, from chatbots to content creation and more.

- Ease of Use: Ollama AI provides user-friendly interfaces and comprehensive documentation, ensuring a smooth integration process.

Installing Ollama

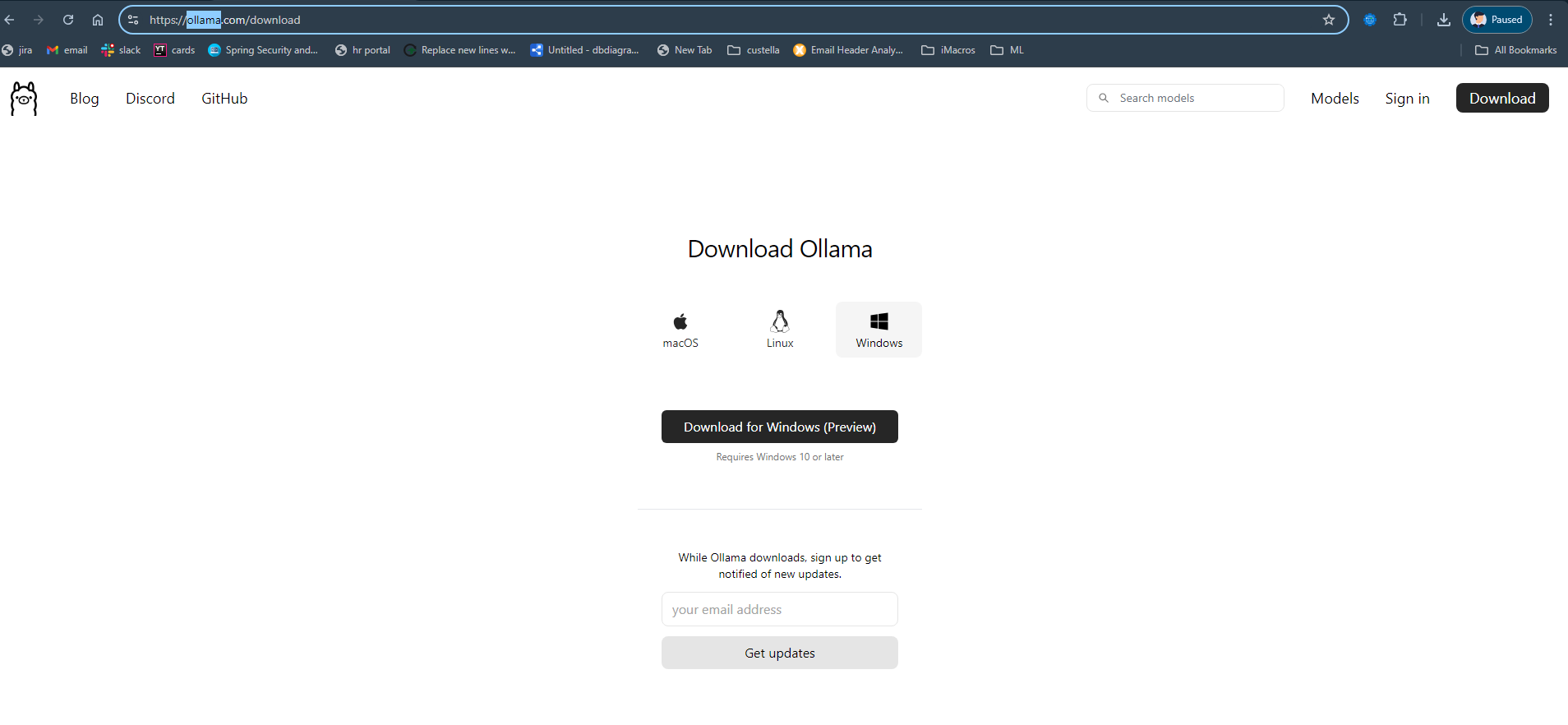

Visit the Ollama website at https://ollama.com and download the package for your operating system.

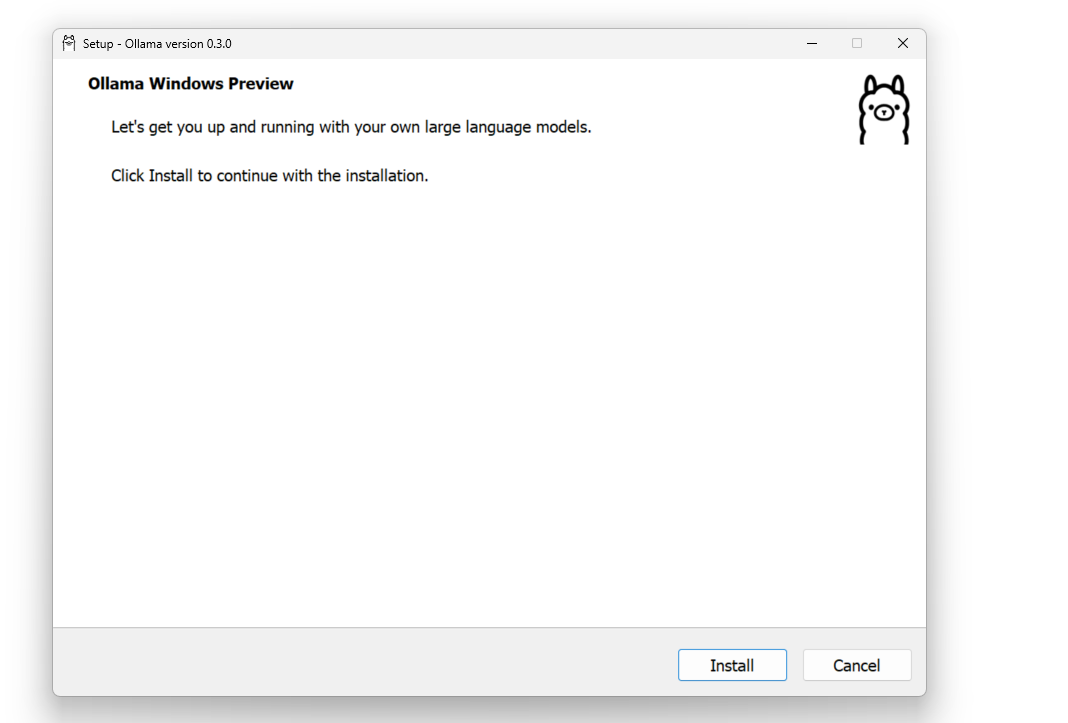

After downloading the installer (this example uses the Windows installer), run it and follow the on-screen steps.

Congratulations! You’ve successfully installed and set up Ollama on your computer.

Now, you can explore and run any of the free models available on Ollama. In this example, we’ll use LLaMA 3.

At the time of writing, LLaMA 3 (Large Language Model Meta AI 3) is the latest release in Meta's (formerly Facebook) series of AI language models. Building on LLaMA 1 and LLaMA 2, it offers improved natural language understanding and generation.

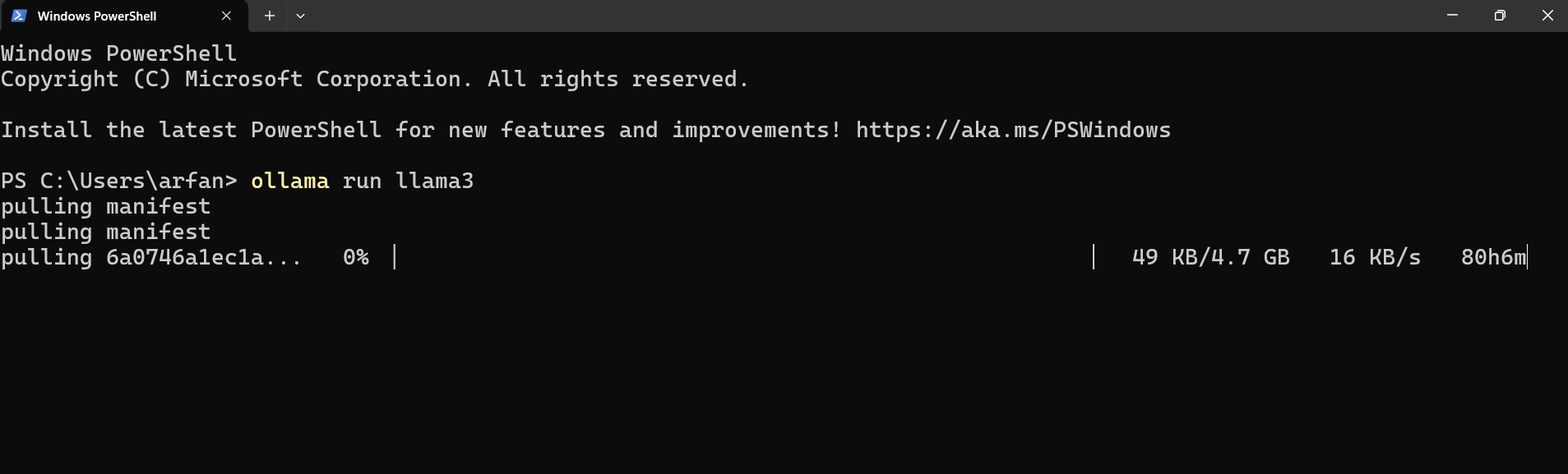

Using Ollama with the Terminal

Open a terminal and run the following command to start the desired model on your local machine.

ollama run llama3

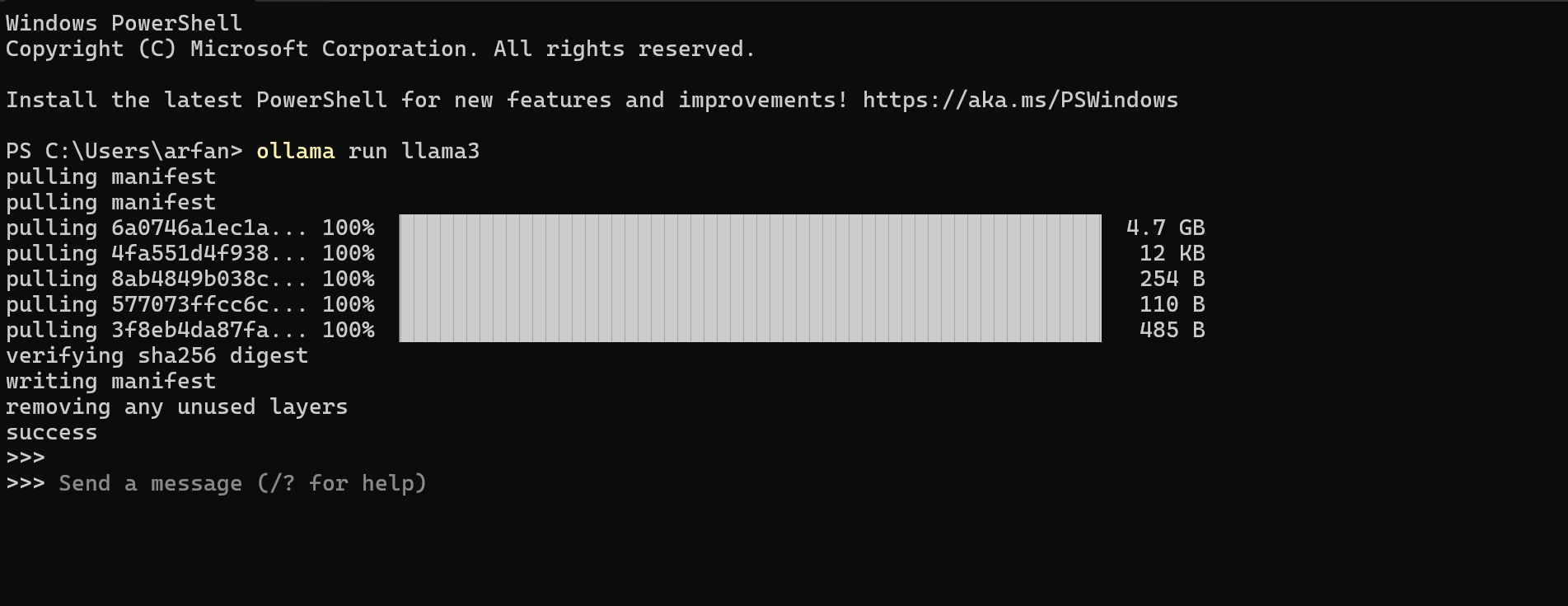

The command will try to find the model locally, and if it does not exist, it will download it from a remote source. This process is similar to how Docker images work.
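To make the local look-up step concrete, here is a minimal Python sketch that checks whether a model is already present on the machine. It assumes the Ollama server is listening on its default local address (http://localhost:11434) and relies on the `/api/tags` endpoint from the Ollama API documentation, which lists locally available models. The helper names `list_local_models` and `is_model_local` are illustrative and not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default local address


def list_local_models(base_url: str = OLLAMA_URL) -> dict:
    """Fetch the locally available models (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=10) as resp:
        return json.load(resp)


def is_model_local(tags: dict, name: str) -> bool:
    """Return True if `name` appears in a /api/tags response.

    A bare name such as "llama3" is treated as "llama3:latest".
    """
    wanted = name if ":" in name else f"{name}:latest"
    return any(m.get("name") == wanted for m in tags.get("models", []))
```

With the server running, `is_model_local(list_local_models(), "llama3")` tells you whether `ollama run llama3` would start immediately or need to download the model first.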

Once the model has downloaded successfully, you can interact with it from the terminal by typing your queries.

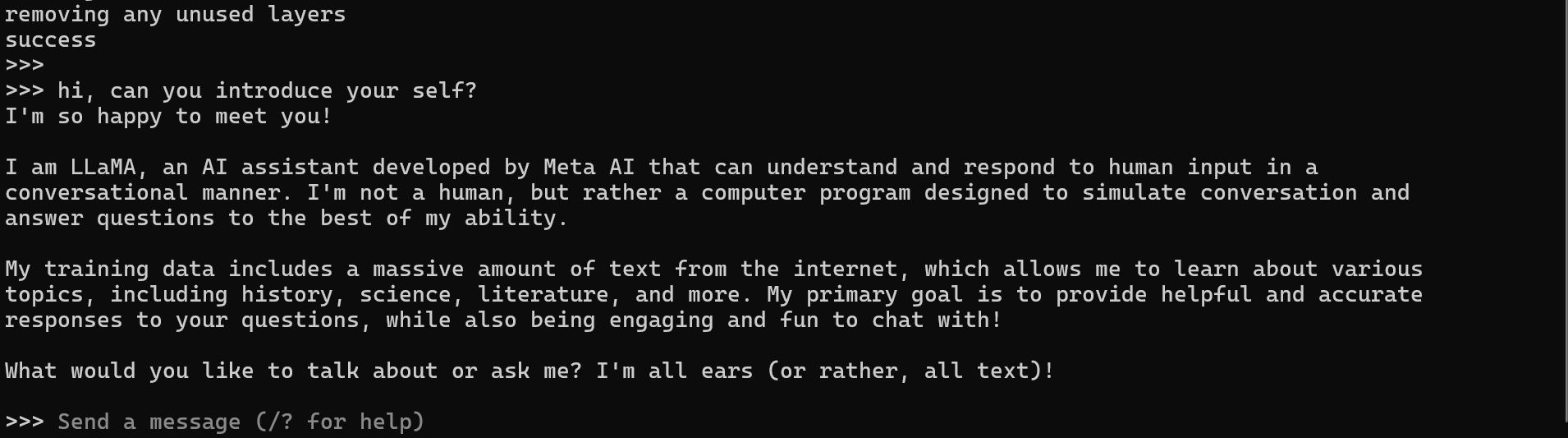

Example 1

>>> hi, can you introduce your self?

I'm so happy to meet you!

I am LLaMA, an AI assistant developed by Meta AI that can understand and respond to human input in a conversational manner. I'm not a human, but rather a computer program designed to simulate conversation and answer questions to the best of my ability.

My training data includes a massive amount of text from the internet, which allows me to learn about various topics, including history, science, literature, and more. My primary goal is to provide helpful and accurate responses to your questions, while also being engaging and fun to chat with!

What would you like to talk about or ask me? I'm all ears (or rather, all text)!

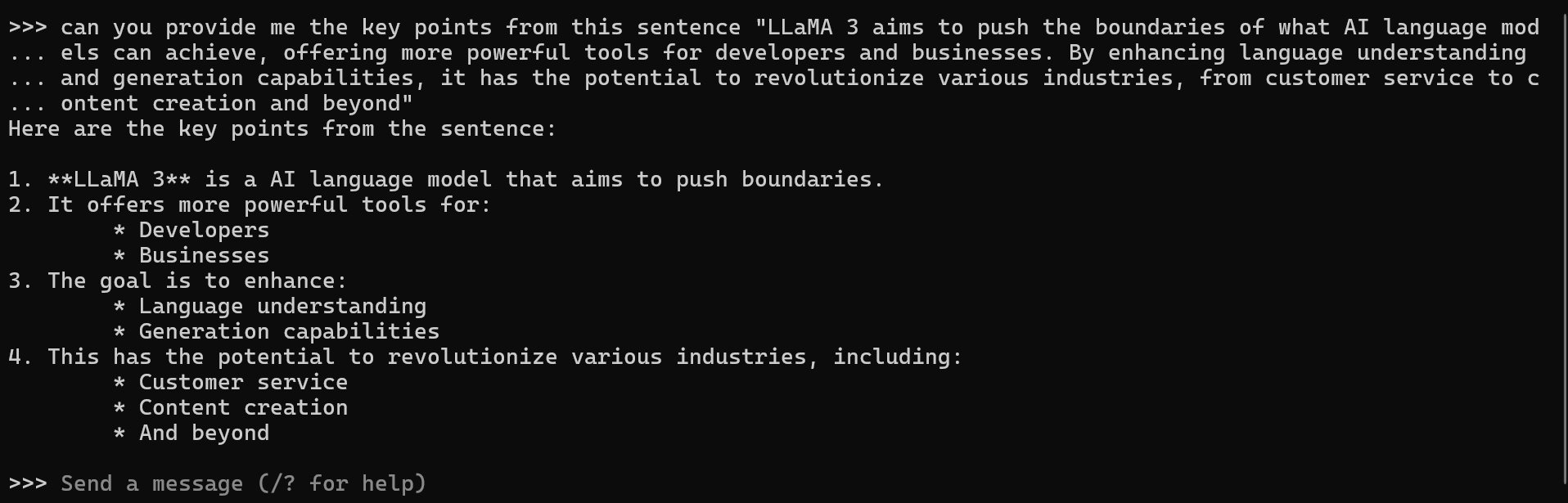

Example 2

>>> can you provide me the key points from this sentence "LLaMA 3 aims to push the boundaries of what AI language models can achieve, offering more powerful tools for developers and businesses. By enhancing language understanding and generation capabilities, it has the potential to revolutionize various industries, from customer service to content creation and beyond"

Here are the key points from the sentence:

1. **LLaMA 3** is a AI language model that aims to push boundaries.
2. It offers more powerful tools for:
    * Developers
    * Businesses
3. The goal is to enhance:
    * Language understanding
    * Generation capabilities
4. This has the potential to revolutionize various industries, including:
    * Customer service
    * Content creation
    * And beyond

>>> Send a message (/? for help)

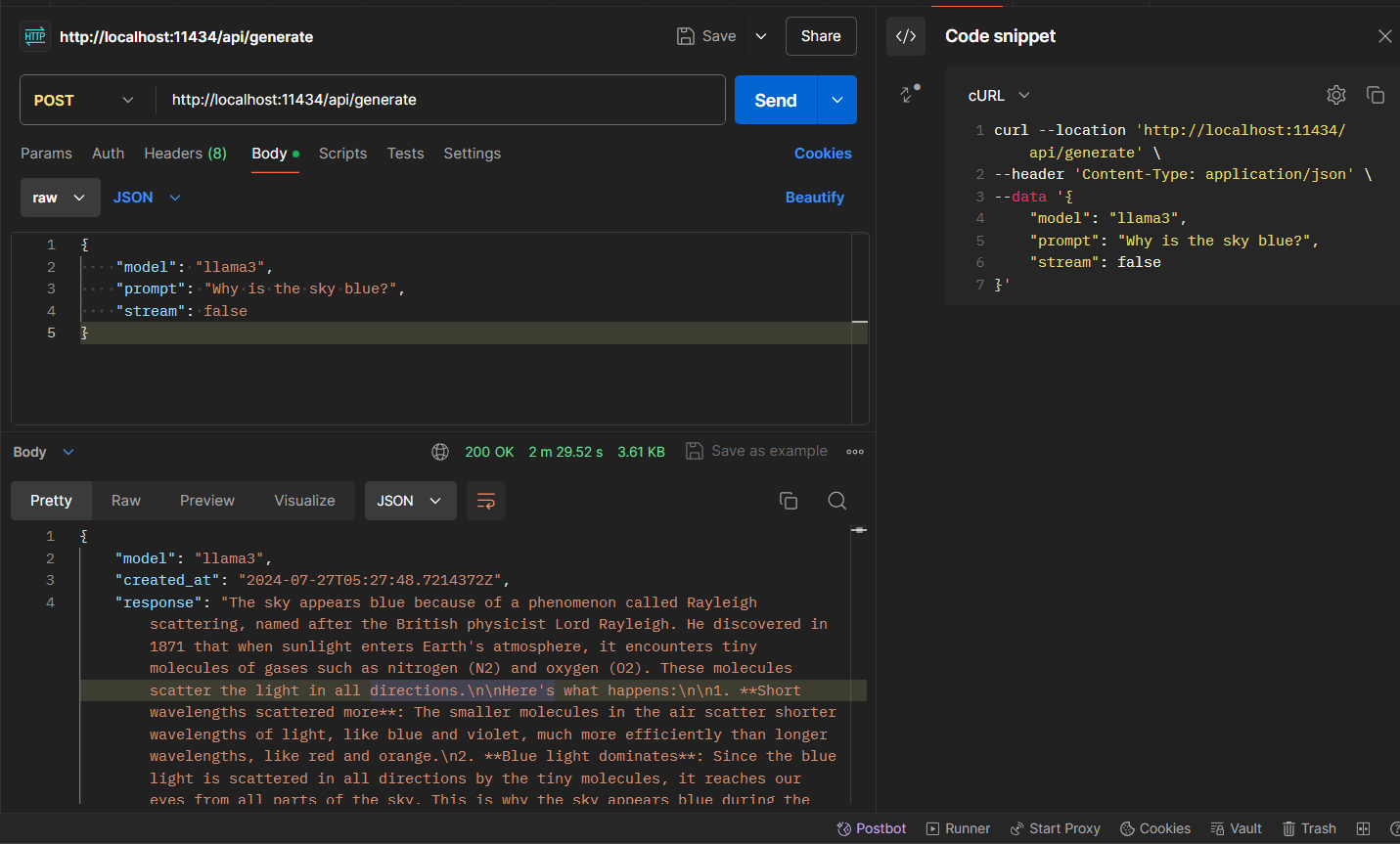

Using Ollama for Development

Playing with Ollama in the terminal is fun, but for real development our applications need to interact with the AI models. For this, Ollama provides a handful of APIs.

The sample below shows a curl command (which can also be imported into Postman) that calls the llama3 model started above, followed by the JSON response it returns.

curl --location 'http://localhost:11434/api/generate' \
--header 'Content-Type: application/json' \
--data '{
    "model": "llama3",
    "prompt": "Why is the sky blue?",
    "stream": false
}'

{
    "model": "llama3",
    "created_at": "2024-07-27T05:27:48.7214372Z",
    "response": "The sky appears blue because of a phenomenon called Rayleigh scattering, named after the British physicist Lord Rayleigh. He discovered in 1871 that when sunlight enters Earth's atmosphere, it encounters tiny molecules of gases such as nitrogen (N2) and oxygen (O2). These molecules scatter the light in all directions.\n\nHere's what happens:\n\n1. **Short wavelengths scattered more**: The smaller molecules in the air scatter shorter wavelengths of light, like blue and violet, much more efficiently than longer wavelengths, like red and orange.\n2. **Blue light dominates**: Since the blue light is scattered in all directions by the tiny molecules, it reaches our eyes from all parts of the sky. This is why the sky appears blue during the daytime.\n3. **Red light travels straight**: The longer wavelengths of light, like red and orange, are not scattered as much and continue to travel in a more direct path to our eyes, which is why we see these colors during sunrise and sunset.\n\nThe color of the sky can also be influenced by:\n\n* Atmospheric conditions: Dust, pollution, and water vapor can scatter light in different ways, making the sky appear more hazy or gray.\n* Time of day: As mentioned earlier, the sky can take on a reddish hue during sunrise and sunset due to the scattering of longer wavelengths.\n* Altitude: At higher altitudes, the air is thinner, and there are fewer molecules to scatter light, resulting in a deeper blue color.\n\nSo, to summarize, the sky appears blue because the tiny molecules in our atmosphere scatter shorter wavelengths of sunlight, like blue and violet, more efficiently than longer wavelengths.",
    "done": true,
    "done_reason": "stop",
    "context": [
        128006, 882, 128007, 271, 10445, 374, 279, 13180, 6437, 30, 128009, 128006,
        78191, 128007, 271, 791, 13180, 8111, 6437, 1606, 315, 264, 25885, 2663,
        13558, 64069, 72916, 11, 7086, 1306, 279, 8013, 83323, 10425, 13558, 64069,
        13, 1283, 11352, 304, 220, 9674, 16, 430, 994, 40120, 29933, 9420,
        596, 16975, 11, 433, 35006, 13987, 35715, 315, 45612, 1778, 439, 47503,
        320, 45, 17, 8, 323, 24463, 320, 46, 17, 570, 4314, 35715,
        45577, 279, 3177, 304, 682, 18445, 382, 8586, 596, 1148, 8741, 1473,
        16, 13, 3146, 12755, 93959, 38067, 810, 96618, 578, 9333, 35715, 304,
        279, 3805, 45577, 24210, 93959, 315, 3177, 11, 1093, 6437, 323, 80836,
        11, 1790, 810, 30820, 1109, 5129, 93959, 11, 1093, 2579, 323, 19087,
        627, 17, 13, 3146, 10544, 3177, 83978, 96618, 8876, 279, 6437, 3177,
        374, 38067, 304, 682, 18445, 555, 279, 13987, 35715, 11, 433, 25501,
        1057, 6548, 505, 682, 5596, 315, 279, 13180, 13, 1115, 374, 3249,
        279, 13180, 8111, 6437, 2391, 279, 62182, 627, 18, 13, 3146, 6161,
        3177, 35292, 7833, 96618, 578, 5129, 93959, 315, 3177, 11, 1093, 2579,
        323, 19087, 11, 527, 539, 38067, 439, 1790, 323, 3136, 311, 5944,
        304, 264, 810, 2167, 1853, 311, 1057, 6548, 11, 902, 374, 3249,
        584, 1518, 1521, 8146, 2391, 64919, 323, 44084, 382, 791, 1933, 315,
        279, 13180, 649, 1101, 387, 28160, 555, 1473, 9, 87597, 4787, 25,
        33093, 11, 25793, 11, 323, 3090, 38752, 649, 45577, 3177, 304, 2204,
        5627, 11, 3339, 279, 13180, 5101, 810, 305, 13933, 477, 18004, 627,
        9, 4212, 315, 1938, 25, 1666, 9932, 6931, 11, 279, 13180, 649,
        1935, 389, 264, 63244, 819, 40140, 2391, 64919, 323, 44084, 4245, 311,
        279, 72916, 315, 5129, 93959, 627, 9, 24610, 3993, 25, 2468, 5190,
        4902, 21237, 11, 279, 3805, 374, 65355, 11, 323, 1070, 527, 17162,
        35715, 311, 45577, 3177, 11, 13239, 304, 264, 19662, 6437, 1933, 382,
        4516, 11, 311, 63179, 11, 279, 13180, 8111, 6437, 1606, 279, 13987,
        35715, 304, 1057, 16975, 45577, 24210, 93959, 315, 40120, 11, 1093, 6437,
        323, 80836, 11, 810, 30820, 1109, 5129, 93959, 13
    ],
    "total_duration": 149495426500,
    "load_duration": 7480182000,
    "prompt_eval_count": 16,
    "prompt_eval_duration": 3712846000,
    "eval_count": 331,
    "eval_duration": 138292982000
}
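For application code, the same request can be made without curl. The following is a minimal Python sketch using only the standard library. It assumes the same local endpoint and request body as the curl example; the function names `build_generate_request` and `generate` are illustrative, and the generous default timeout is my own choice, prompted by the sample response above taking well over two minutes on this machine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: default local address


def build_generate_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build the same POST /api/generate request as the curl example."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3", timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return only the generated text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.load(resp)
    return body["response"]
```

Calling `generate("Why is the sky blue?")` with the Ollama server running should return the text found in the `response` field of the JSON shown above, though the wording will vary from run to run.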

To learn more about Ollama and its APIs, refer to the official GitHub documentation: https://github.com/ollama/ollama/blob/main/docs/api.md

In our next post, we will explore how to use Ollama models in backend applications to build useful features. Happy coding!
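One detail from that documentation is worth illustrating: the duration fields in the response are reported in nanoseconds, and generation speed in tokens per second can be estimated as `eval_count / eval_duration * 10^9`. The sketch below applies this to the numbers from the sample response; the function name `summarize_timing` and the keys of the returned dictionary are illustrative.

```python
NANOSECONDS_PER_SECOND = 1_000_000_000


def summarize_timing(response: dict) -> dict:
    """Convert the nanosecond timing fields of a /api/generate response."""
    return {
        "total_seconds": response["total_duration"] / NANOSECONDS_PER_SECOND,
        "load_seconds": response["load_duration"] / NANOSECONDS_PER_SECOND,
        "tokens_per_second": (
            response["eval_count"] / response["eval_duration"] * NANOSECONDS_PER_SECOND
        ),
    }


# Timing fields copied from the sample response in this article.
sample = {
    "total_duration": 149495426500,
    "load_duration": 7480182000,
    "eval_count": 331,
    "eval_duration": 138292982000,
}

summary = summarize_timing(sample)
print(f"total: {summary['total_seconds']:.1f} s, "
      f"speed: {summary['tokens_per_second']:.2f} tokens/s")
```

For the sample run this works out to about 149.5 seconds in total and roughly 2.4 tokens per second, which gives a feel for what to expect on similar hardware.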
